Bandits in your LLM Gateway: Improve LLM Applications Faster with Adaptive Experimentation (A/B Testing)

Summary

Experimentation (A/B testing) with production traffic is the most reliable way to identify the best prompts and models for your task, but traditional approaches have significant limitations: you must either fix the experiment length in advance (risking wasted data or inconclusive results) or repeatedly check for significance (inflating error rates through p-hacking).

TensorZero now provides adaptive experimentation directly in its open-source LLM gateway. This multi-armed bandit algorithm overcomes the p-hacking problem, running experiments precisely until there’s enough evidence to pick a winner while dynamically allocating LLM inference traffic for maximum efficiency.

Across a diverse set of realistic and challenging environments, adaptive experimentation reduced the average time to correctly identify the best LLM variants (prompts, models, etc.) by 37% compared to simple A/B testing.

Developing LLM applications involves numerous design decisions: which model to use, how to structure prompts, what temperature and sampling parameters to set, etc. While offline evaluations and synthetic benchmarks can guide these choices, the most reliable way to optimize them is through experimentation (A/B testing) with real production traffic and feedback.

Traditional experimentation involves allocating traffic evenly (or according to fixed proportions) across two or more variants of your application that you want to compare and tracking a metric or outcome of interest. After collecting data, you use a hypothesis test to decide whether you have enough evidence to label one variant the “winner,” meaning that it outperforms the other variants on average. Traditional experimentation has two fundamental limitations:

- Fixed stopping rules: You must fix either the sample size or test duration in advance (which risks collecting too little or too much data), or repeatedly check for statistical significance (which inflates your error rates; this is the classic “p-hacking” problem).

- Suboptimal sample allocation: Traffic allocation does not take variants’ performance into account. This means you continue wasting resources on underperforming variants even after accumulating strong evidence against them. An efficient strategy would typically concentrate sampling on the most promising variants as confidence grows, enabling faster identification of the true winner.

In this post, we’ll introduce a multi-armed bandit algorithm that enables adaptive experimentation with dynamic stopping, a more efficient approach that addresses both these limitations. We’ll explain how it works, show you how to implement it for your LLM applications using TensorZero, and demonstrate its advantages with real experimental data.

Multi-Armed Bandits for Adaptive Experimentation

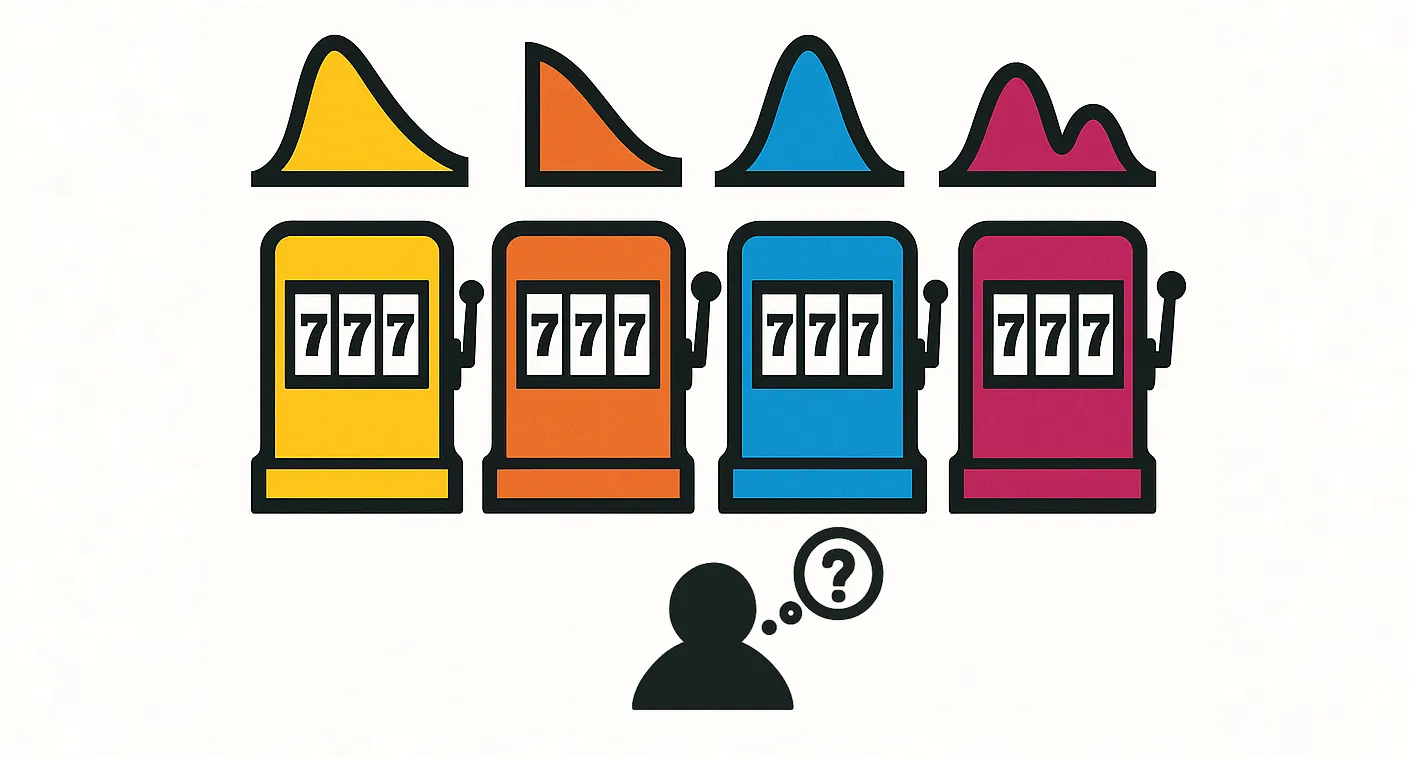

The mathematical foundation for adaptive experimentation comes from “multi-armed bandits,” a classic framework from reinforcement learning and sequential decision-making.

Imagine a gambler facing a row of slot machines, each with an unknown payout (“reward”) distribution. At each iteration, the gambler has to decide which arm to pull in order to obtain a payout and learn about that machine’s reward distribution.

Multi-armed bandit algorithms use the historical rewards to guide the decisions about which arm to pull in order to achieve a given problem objective.

In the context of LLM experimentation, an “arm” represents a particular LLM configuration: a choice of model, prompt, hyperparameters, inference strategy, and so on.

The two main problem settings are regret minimization (maximizing cumulative reward during the experiment) and best-arm identification (efficiently identifying the best arm with high confidence). We focus on best-arm identification, because practitioners developing LLM applications typically want to identify the best variant and deploy it exclusively going forward, rather than maximize performance during the experimentation phase. Additionally, since serving costs decrease with scale, it’s generally most efficient to allocate all compute to a single LLM.

Best-Arm Identification vs. Regret Minimization

Best-arm identification (BAI) is sometimes called the pure exploration setting, because the goal is to learn which arm is best without worrying about performance during the experiment itself. In contrast, regret minimization algorithms balance exploration (sampling arms to learn about their performance) and exploitation (sampling the apparent best arm to maximize immediate reward).

Best-arm identification comes in two main varieties [1, 2]:

- Fixed-confidence BAI: Minimize the number of samples needed to identify the best arm with a specified confidence level (probability at least 1 − δ for some δ ∈ (0, 1)). TensorZero uses this approach.

- Fixed-budget BAI: Given a fixed number of samples, minimize the probability of misidentifying the best arm. This is useful when experimental resources are strictly limited.

ε-Best-Arm Identification is a more general version of BAI where the algorithm can identify any arm that’s “ε-close” to the best arm [3]. This can significantly reduce the number of samples needed when you only care about finding “approximately optimal” arms rather than identifying the single best one. Setting ε = 0 (TensorZero’s default) means you want to find the exact best arm.
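In symbols, the two guarantees described above can be sketched as follows. The notation is introduced here for illustration and is an assumption, not taken from the original post: $\tau$ is the (random) stopping time, $\hat{a}_\tau$ the arm returned at that time, $\mu_a$ the mean reward of arm $a$, and $a^\star$ the best arm.

```latex
\begin{align*}
\text{Fixed-confidence BAI:} \quad
  & \mathbb{P}\left(\hat{a}_\tau = a^\star\right) \ge 1 - \delta
  \quad \text{while minimizing } \mathbb{E}[\tau], \\
\text{$\varepsilon$-BAI:} \quad
  & \mathbb{P}\left(\mu_{\hat{a}_\tau} \ge \mu^\star - \varepsilon\right) \ge 1 - \delta,
  \qquad \mu^\star = \max_a \mu_a .
\end{align*}
```

With $\varepsilon = 0$ and a unique best arm, the second condition reduces to the first.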

Why not use regret minimization algorithms?

Popular regret minimization algorithms like Thompson Sampling [4] and Upper Confidence Bound (UCB) [5] are not optimal for best-arm identification [6, 7, 8]. They tend to concentrate samples on the apparent best arm to maximize cumulative reward, but don’t explore other arms frequently enough to confidently distinguish between close competitors [7]. In fact, no algorithm can simultaneously perform optimally for both objectives [8, 9, 10].

The key to efficient best-arm identification is knowing how to allocate samples across variants. For any given experiment, there exists a set of optimal sampling proportions: proportions over the arms that minimize the number of samples needed to confidently identify the best arm [1]. These are almost never uniform. For example, arms with higher reward variances may require more samples to confidently estimate their mean rewards. Underperforming arms often require little data to be ruled out, meaning sampling can focus on distinguishing the top variants. Crucially, these proportions depend on the unknown reward distributions, so they can’t be known in advance. (If we knew the reward distributions up front, there would be no need to conduct an experiment!) They can only be estimated as the experiment unfolds and data accumulates.
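To make the role of variance concrete, here is a minimal sketch for a deliberately simplified case: two arms with known reward standard deviations, where we only look at the variance of the estimated gap between their means. The function names and the numbers are hypothetical, and this is not how TensorZero computes its proportions; it only illustrates why uniform allocation is generally not the most efficient choice. Minimizing the gap variance gives a weight proportional to each arm's standard deviation.

```python
def gap_variance(sigma_a, sigma_b, w_a, n):
    """Variance of (mean_a_hat - mean_b_hat) when a fraction w_a of n samples goes to arm A."""
    return sigma_a**2 / (w_a * n) + sigma_b**2 / ((1 - w_a) * n)


def variance_minimizing_weight(sigma_a, sigma_b):
    """Fraction of samples for arm A that minimizes gap_variance (allocation proportional to std dev)."""
    return sigma_a / (sigma_a + sigma_b)


# Hypothetical example: arm B is three times noisier than arm A.
sigma_a, sigma_b, n = 1.0, 3.0, 1000
w_opt = variance_minimizing_weight(sigma_a, sigma_b)
uniform = gap_variance(sigma_a, sigma_b, 0.5, n)
optimal = gap_variance(sigma_a, sigma_b, w_opt, n)
print(f"weight on A: {w_opt:.2f}, uniform variance: {uniform:.4f}, optimal variance: {optimal:.4f}")
```

In this toy setting the noisier arm receives 75% of the samples, and the same sample budget yields a gap estimate with 20% less variance than a uniform split.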

In traditional experimentation with fixed sampling probabilities (typically uniform), it’s extremely unlikely that you’d land on these optimal proportions by chance. Even if you got lucky and chose good probabilities up front, you’d still need to stop at precisely the right moment to get the efficiency benefits. But repeatedly checking for statistical significance to decide when to stop using a traditional hypothesis test introduces the p-hacking problem, inflating error rates.

The p-hacking problem in traditional experiments

In traditional experimentation, you must choose between two approaches, both of which have serious limitations:

- Fix the sample size or test duration in advance: You decide ahead of time to collect exactly N samples (or run for exactly T days), then check for statistical significance only at the end. The choice of how long to run the experiment might be informed by a power analysis: a determination of what sample size would be required to detect a winner under certain assumptions about the unknown reward distributions. This approach maintains valid statistical guarantees, but you risk:

  - Under-sampling: If you choose N too small, you may not collect enough data to detect a winner, leaving you with an inconclusive result. You cannot simply continue sampling until significance is reached, as that would be p-hacking.

  - Over-sampling: If you choose N too large, you waste resources collecting unnecessary data from underperforming variants after you already have sufficient evidence to identify the winner.

- Check for significance repeatedly during the experiment: You might be tempted to check your test statistic as data arrives and stop as soon as p < 0.05. However, this practice (known as p-hacking or optional stopping) inflates your false positive rate far above the nominal 5% level [11]. This means you’re much more likely to mislabel a variant as the winner. The problem affects confidence intervals too: they lose their coverage guarantees under optional stopping [12]. The statistical guarantees of traditional hypothesis tests are only valid when the stopping rule is fixed in advance.

These constraints mean traditional experimentation cannot efficiently adapt to the data as it arrives. You risk either collecting too little data (and getting no answer) or too much data (and wasting resources), with no valid way to “peek” at the results and stop early.
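The inflation caused by peeking is easy to reproduce. The following is a small simulation sketch, not taken from the original post: two arms share the same true click rate (so any declared winner is a false positive), and we compare a single two-proportion z-test at the end against stopping at the first of 20 interim looks that shows |z| > 1.96. All function names, parameters, and the choice of test are assumptions made for illustration.

```python
import math
import random


def z_stat(s_a, s_b, n):
    """Two-proportion z statistic with n samples per arm and pooled variance."""
    p_pool = (s_a + s_b) / (2 * n)
    se = math.sqrt(2 * p_pool * (1 - p_pool) / n)
    return 0.0 if se == 0 else (s_a / n - s_b / n) / se


def false_positive_rates(n_sims=1000, n_max=500, check_every=25, p=0.3, z_crit=1.96, seed=0):
    """Return (rate with repeated peeking, rate with one fixed-horizon test) under no true difference."""
    rng = random.Random(seed)
    peek_hits = fixed_hits = 0
    for _ in range(n_sims):
        s_a = s_b = 0
        peeked = False
        for n in range(1, n_max + 1):
            s_a += rng.random() < p
            s_b += rng.random() < p
            # Peeking: declare a winner at the first interim look that crosses the threshold.
            if n % check_every == 0 and abs(z_stat(s_a, s_b, n)) > z_crit:
                peeked = True
        peek_hits += peeked
        # Fixed horizon: test exactly once, after all n_max samples per arm.
        fixed_hits += abs(z_stat(s_a, s_b, n_max)) > z_crit
    return peek_hits / n_sims, fixed_hits / n_sims


peek_rate, fixed_rate = false_positive_rates()
print(f"false positives with peeking: {peek_rate:.3f}, fixed horizon: {fixed_rate:.3f}")
```

The fixed-horizon rate should land near the nominal 5%, while the peeking rate comes out several times higher even though both use the same data and the same threshold.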

TensorZero’s multi-armed bandit algorithm is an implementation of the Track-and-Stop strategy for best-arm identification [1], which solves both these challenges. First, it learns the optimal sampling proportions on the fly and dynamically allocates traffic to converge toward them. Second, it uses an anytime-valid statistical test (a generalized likelihood ratio test) that maintains valid error rate control no matter how frequently you check it. As soon as the algorithm has identified a winner with sufficient confidence, it stops the experiment and directs all subsequent traffic to that winner. The Track-and-Stop approach is asymptotically optimal for a large class of bandit problems, meaning that as the target error rate shrinks, it requires the fewest possible samples on average to identify a winner.

Our algorithm is inherently flexible: You can add new variants to an experiment at any time, and it will adjust sampling probabilities to search for the winner among the expanded set. This means you can continuously iterate on your LLM system and test promising new ideas as they emerge. Note that the optimal sampling proportions for the new set may be different from the old set, so if you have already collected a lot of inferences, it may be more efficient to wait for a winner to be identified and then start a new experiment. Since you don’t have to worry about p-hacking, you can always check the dashboard to see if it looks like your current experiment is close to identifying a winner to decide whether to add new variants or wait.

What are anytime-valid statistical tests?

Anytime-valid inference (also called SAVI, for safe anytime-valid inference) is a set of modern statistical methods that let you check results and draw conclusions at any point without inflating error rates [13].

This includes:

- Confidence sequences: Sequences of intervals that maintain valid coverage at all stopping times, not just at a pre-specified sample size [12]

- Sequential hypothesis tests: Tests designed specifically for sequential data collection with stopping rules

The mathematical foundation of all these methods is the theory of nonnegative (super)martingales, which provides the key theoretical machinery for constructing valid sequential inference and testing procedures [13, 14, 15]. These methods allow practitioners to continuously monitor experiments and stop early when sufficient evidence accumulates, while maintaining rigorous Type I error control at the desired level (e.g. 5%).
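As a toy illustration of the martingale idea (and not TensorZero's actual test), consider testing whether a click rate equals 0.5 by tracking a likelihood ratio against a fixed alternative of 0.6. Under the null hypothesis this ratio is a nonnegative martingale starting at 1, so by Ville's inequality the chance that it ever reaches 1/α is at most α, no matter how often you look. The function name, the choice of alternative, and the simulation sizes below are assumptions made for this sketch.

```python
import math
import random


def crosses_threshold(n_steps, p_true, p_null=0.5, p_alt=0.6, alpha=0.05, rng=random):
    """Check the likelihood-ratio martingale after every observation; stop when it reaches 1/alpha."""
    log_m = 0.0  # log of the likelihood ratio, starts at log(1)
    log_threshold = math.log(1 / alpha)
    for _ in range(n_steps):
        clicked = rng.random() < p_true
        if clicked:
            log_m += math.log(p_alt / p_null)
        else:
            log_m += math.log((1 - p_alt) / (1 - p_null))
        if log_m >= log_threshold:
            return True  # reject the null at this (data-dependent) time
    return False


rng = random.Random(0)
n_sims = 1000
# Data generated under the null: rejections here are false positives.
null_rate = sum(crosses_threshold(500, 0.5, rng=rng) for _ in range(n_sims)) / n_sims
# Data generated under the alternative: rejections here are correct detections.
alt_rate = sum(crosses_threshold(500, 0.6, rng=rng) for _ in range(n_sims)) / n_sims
print(f"rejection rate under null: {null_rate:.3f}, under alternative: {alt_rate:.3f}")
```

Even though the statistic is checked after every single observation, the false positive rate stays around or below the 5% target, in contrast to repeatedly checking a classical p-value.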

Before we dive into implementation details, let’s go through an example.

The charts below illustrate our algorithm with four arms (“A” through “D”) using synthetic data. The goal is to maximize a metric like the user click rate. The arms are pulled in batches of size 40, and the rewards (clicks) come from Bernoulli distributions with known means: . As time progresses, the top chart shows how sampling probabilities adapt: the system initially explores all arms uniformly, then adaptively adjusts the probabilities based on performance to identify the best performing arm as quickly as possible. The bottom chart displays estimated performance for each arm along with 95% confidence sequences that narrow over time.

The algorithm correctly identifies the winning arm at time step 27, after which it directs all traffic to the winner. (At this point, the means of the other arms stop updating, since we no longer collect data from these arms.)

Stopping Rule vs. Confidence Sequences

Our stopping rule is based on a generalized likelihood ratio test (GLRT) [1, 16]. The GLRT compares the likelihood of the data under the hypothesis that “arm i is best” against the likelihood under all alternative hypotheses. When the GLRT statistic exceeds a threshold that depends on the number of samples and target error rate δ, the algorithm stops and declares a winner.

The GLRT guarantees that the probability of incorrectly identifying the best arm is at most δ, regardless of when you stop [1]. In the chart above, δ is set to .

Like other anytime-valid methods, the GLRT can be grounded in the martingale theory mentioned above [13, 14, 15], which enables valid error control at any stopping time.
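For intuition, here is a minimal sketch of a GLRT-style stopping check for Bernoulli rewards, following the general form of the statistic in Garivier and Kaufmann [1]: for the empirically best arm and each challenger, it measures how far both empirical means are from their pooled mean, weighted by sample counts. The function names are hypothetical, and the threshold log((log t + 1) / δ) is a commonly used heuristic that we assume here for illustration; it is not necessarily the threshold TensorZero uses.

```python
import math


def kl_bernoulli(p, q, eps=1e-12):
    """KL divergence between Bernoulli(p) and Bernoulli(q), clipped away from 0 and 1."""
    p = min(max(p, eps), 1 - eps)
    q = min(max(q, eps), 1 - eps)
    return p * math.log(p / q) + (1 - p) * math.log((1 - p) / (1 - q))


def glrt_statistic(successes, pulls):
    """Return (index of empirically best arm, smallest pairwise GLRT statistic against it)."""
    means = [s / n for s, n in zip(successes, pulls)]
    best = max(range(len(means)), key=means.__getitem__)
    stats = []
    for b in range(len(means)):
        if b == best:
            continue
        n_a, n_b = pulls[best], pulls[b]
        pooled = (successes[best] + successes[b]) / (n_a + n_b)
        stats.append(n_a * kl_bernoulli(means[best], pooled) + n_b * kl_bernoulli(means[b], pooled))
    return best, min(stats)


def should_stop(successes, pulls, delta=0.05):
    """Stop when the hardest-to-rule-out challenger is still rejected at the assumed threshold."""
    t = sum(pulls)
    threshold = math.log((math.log(t) + 1) / delta)  # assumed heuristic threshold
    best, stat = glrt_statistic(successes, pulls)
    return stat > threshold, best


print(should_stop([80, 40], [100, 100]))  # clear gap between the two arms
print(should_stop([50, 50], [100, 100]))  # identical empirical means
```

The statistic is driven by the closest challenger, which is why experiments with several near-tied variants take longer to conclude.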

The confidence sequences shown in the bottom chart above provide time-uniform uncertainty estimates for each arm’s mean reward: for each arm, the probability that its mean reward stays inside the confidence sequence at every time step is (approximately) 95%. Equivalently, the probability that it ever falls outside is (approximately) at most 5%. (The coverage is approximate because we use asymptotic confidence sequences [17].)

Note, however, that the confidence sequences in the bottom chart are primarily for visualization purposes and do not directly map onto the stopping rule that’s reflected in the top chart.

Why They Don’t Match

The stopping rule is designed to use the theoretical minimum number of samples for our ε-BAI setting, while the confidence sequences help you visualize uncertainty for each arm. The key distinction is:

- GLRT: Tests a joint hypothesis about the relative ordering of arms (“arm i beats all others”)

- Confidence sequences: Provide marginal uncertainty estimates for individual arm means

Even when all individual confidence intervals are relatively narrow, the GLRT may not yet have sufficient evidence to declare a winner if the arms are close in performance. Conversely, the GLRT could potentially identify a clear winner even when confidence intervals overlap, if the likelihood ratio provides strong evidence.

Benefits of Multi-Armed Bandits vs. Fixed Sampling

Comparing our bandit approach to traditional experimentation is challenging because practitioners use widely varying strategies: different sample sizes, different stopping criteria, different frequencies for checking results, and different statistical tests. This variability makes it difficult to establish a fair baseline.

To isolate the benefits of the adaptive sampling aspect of our algorithm, we ran simulations comparing our adaptive algorithm against uniform sampling using the same anytime-valid stopping rule. Across a diverse set of realistic and challenging environments, adaptive sampling reduced the average time to correctly identify the best variant by 37% compared to uniform sampling. This means experiments reach valid conclusions substantially faster with adaptive allocation, reducing both the cost of experimentation and the time spent serving suboptimal variants to users.

Implementing Bandits for LLM Applications

In the context of LLM applications, each “arm” represents a different configuration:

- Different prompts for the same task

- Different models (e.g. GPT-5, Claude 4.5 Sonnet, Gemini 2.5 Pro)

- Different hyperparameters (temperature, top_p, etc.)

- Different system configurations (RAG settings, agent tools, etc.)

The reward signal could be:

- A task-specific metric (e.g. accuracy, F1 score, success rate)

- User feedback (e.g. thumbs up/down, ratings)

- A business KPI (e.g. conversion rate, engagement time)

Open-Source Implementation — TensorZero

TensorZero is an open-source LLMOps platform that unifies an LLM gateway, observability, optimization, evaluation, and experimentation.

In TensorZero, arms correspond to variants — different implementations of the same function that vary in their prompts, models, or other parameters. When you call a TensorZero function, the multi-armed bandit algorithm automatically selects which variant to use based on the current sampling probabilities.

Rewards correspond to feedback — structured data you provide to TensorZero about the quality of each inference or each episode (set of inferences). You define metrics in your configuration to specify how to aggregate feedback into scalar values that the algorithm optimizes. TensorZero’s multi-armed bandit algorithm can optimize boolean metrics (e.g. whether a user clicks) or float metrics (e.g. user ratings). You choose whether to maximize or minimize the chosen metric.

To set up an adaptive experiment, all you need to do is include the appropriate block in your tensorzero.toml file.

For example, to set up an experiment between two variants (gpt-5-mini-good-prompt and gpt-5-mini-bad-prompt) for a function named extract_entities, optimizing for a metric called exact_match, you’d include the following configuration snippet:

    [functions.extract_entities.experimentation]
    type = "track_and_stop"
    candidate_variants = ["gpt-5-mini-good-prompt", "gpt-5-mini-bad-prompt"]
    metric = "exact_match"

To learn more, see our docs: How to run adaptive A/B tests

Configuring Error Rates and Experiment Duration

TensorZero provides sensible defaults for most use cases, but you can customize the algorithm’s parameters to optimize for your specific needs.

Our Track-and-Stop algorithm is controlled by two key parameters:

- epsilon (ε): A parameter that controls the minimum detectable difference between variants. Smaller values of ε make the algorithm more sensitive to small performance differences but require more data to reach a decision. Setting ε = 0 (the default) means the algorithm aims to find the strictly best variant; setting ε > 0 means the algorithm is content to find any variant whose mean performance is at most ε below the best arm’s mean (including the best arm itself).

- delta (δ): The maximum probability of incorrectly identifying the best variant (Type I error rate). For example, setting δ = 0.05 (the default) means there’s at most a 5% chance the algorithm declares the wrong variant as the winner.

These parameters present a fundamental tradeoff: higher sensitivity and lower error rates require longer experiments.

- Decreasing ε narrows the set of acceptable winners to variants closer to the true best, but requires more samples to distinguish variants that are close in performance.

- Decreasing δ (e.g. from 0.05 to 0.01) provides stronger statistical guarantees but requires more samples since more evidence is needed to confidently identify the best variant.

In practice, you should choose these parameters based on your application’s requirements.

If the cost of misidentification is high (e.g. deploying a variant that harms user experience), use a smaller δ.

If you only care about finding variants that are substantially better than the status quo, you can use a larger ε to speed up convergence.

Note that it’s not possible to set δ = 0, because you can never conclude with 100% confidence that the results you observe are due to true differences rather than random chance—achieving zero error probability would require infinite samples.
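To get a rough feel for how δ affects experiment length, recall the lower bound from Garivier and Kaufmann [1]: the expected number of samples is at least T* · kl(δ, 1 − δ), where T* is a constant that depends on the (unknown) reward distributions and kl is the binary relative entropy. The sketch below only evaluates the δ-dependent factor; the function name is hypothetical, and actual experiment lengths will also depend on T* and on implementation details.

```python
import math


def kl_binary(delta):
    """kl(delta, 1 - delta): the delta-dependent factor in the sample-complexity lower bound."""
    return delta * math.log(delta / (1 - delta)) + (1 - delta) * math.log((1 - delta) / delta)


for delta in (0.1, 0.05, 0.01, 0.001):
    print(f"delta = {delta:<6} factor = {kl_binary(delta):.3f}")

# Relative increase in the lower bound when tightening delta from 0.05 to 0.01.
ratio = kl_binary(0.01) / kl_binary(0.05)
print(f"0.05 -> 0.01 multiplies the bound by about {ratio:.2f}")
```

The factor grows roughly like log(1/δ), so tightening δ is comparatively cheap, but it diverges as δ approaches 0, which matches the observation that zero error probability would require infinite samples.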

The algorithm can handle any number of variants. However, if many variants have similar performance (close to the best one), the algorithm will need more samples to confidently distinguish between them.

Real Example: Optimizing the Prompt and Model for a Data Extraction Task

Now let’s look at real LLM data. We ran an adaptive experiment to optimize variants for a data extraction task: named entity recognition (NER). The goal was to extract person, location, and organization entities from text. We tested four variants, consisting of two models (Claude Haiku 4.5 and Claude Sonnet 4.5) crossed with two prompts: a simple prompt with minimal instructions and a more detailed prompt.

The metric we optimized for was exact_match: whether the model’s output exactly matched the ground truth entities (a boolean metric).

The algorithm was configured with the default values of delta = 0.05 (5% error rate) and epsilon = 0.0 (strict best-arm identification).

We limited the experiment to 2,000 inferences.

Below we see the state of the experiment approximately halfway through and at the end. (These charts are very similar to the ones you’d find in the open-source TensorZero UI!) You can also find the code to reproduce this experiment on GitHub.

Halfway Through the Experiment (~1,000 samples)

At this point, the algorithm is still exploring all four variants, with sampling probabilities concentrated most heavily on the two best-performing variants, claude-sonnet-4-5-detailed-prompt (39.7%) and claude-sonnet-4-5-simple-prompt (38.4%).

Note that the variant with the highest mean doesn’t always get the highest sampling probability (though it does here). That’s because the optimal proportions depend not just on the means, but also on the variances.

Variant Weights

This chart displays the current sampling probabilities for each variant.

Full Experiment (2,000 samples)

By the end of the experiment, the algorithm has identified a clear winner (claude-sonnet-4-5-detailed-prompt) and allocated 100% of subsequent traffic to it.

Looking at the Feedback Count tab, we see that this occurred between minute 3 and minute 4 (that is, between inference 959 and inference 1,255), because the counts for all the other variants stopped changing after that point.

Variant Weights

This chart displays the current sampling probabilities for each variant.

How TensorZero’s Algorithm Works

The Track-and-Stop strategy that we implement was originally developed in the academic literature under idealized assumptions: immediate feedback after each inference and deterministic arm selection based on perfect knowledge of all accumulated data [1]. Production LLM applications present very different challenges that require careful engineering to preserve the algorithm’s theoretical optimality while making it practical.

Production Challenges

- Asynchronous feedback: In real LLM applications, feedback rarely arrives immediately. Users might provide ratings minutes, hours, or days after an inference. Automated feedback (like task completion metrics) may need to be computed asynchronously in background jobs. The algorithm must work with whatever feedback has arrived so far, even as new inferences continue.

- Parallel requests: Production systems handle many concurrent inference requests from different users or application instances. You can’t deterministically sequence arm selections when multiple requests arrive simultaneously; attempting to do so would create a severe bottleneck.

- Computational overhead: Computing optimal sampling probabilities requires retrieving and aggregating all accumulated feedback from a database and then using that data to solve a numerical optimization problem. Doing this after every single inference would be prohibitively expensive and slow down your application.

- Variable throughput: The rate of inferences can vary dramatically: you might process 100 requests one day and 10,000 the next, or experience daily cycles with peak hours. The algorithm needs to adapt gracefully to these variations without making assumptions about traffic patterns.

TensorZero’s Solution

Our implementation, written in Rust for performance, addresses these challenges while preserving the optimality of the Track-and-Stop strategy:

- Probabilistic sampling: Instead of deterministic arm selection, we maintain sampling probabilities that estimate the optimal proportions. Each inference randomly samples from these probabilities, allowing parallel requests without coordination overhead.

- Periodic updates: A background task periodically recomputes sampling probabilities at a configurable frequency (typically every few minutes). It computes these probabilities by solving a second-order cone program (SOCP) using the Clarabel optimizer [18]. This balances responsiveness to new data with computational efficiency.

- Learning phase: Before random sampling begins, variants are sampled round-robin until reaching a minimum sample threshold (10 by default). This ensures stable statistics before entering the main optimization, preventing premature concentration on poorly estimated variants.

- Regularization: The optimization includes a decaying regularization term that penalizes deviation from uniform sampling. This smooths the transition from initial exploration to optimal allocation, improving robustness when sample sizes are still small.

The stopping rule continuously evaluates the generalized likelihood ratio test (GLRT) to detect when sufficient evidence has accumulated, automatically directing all traffic to the winner.
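The per-request selection logic described above (a round-robin learning phase followed by random sampling from the current probabilities) can be sketched in a few lines. This is an illustrative Python sketch with hypothetical names, not TensorZero's Rust implementation; in particular, a real gateway serving parallel requests would work from periodically refreshed counts and probabilities rather than a shared dictionary.

```python
import random


def choose_variant(counts, probs, min_samples=10, rng=random):
    """Pick a variant name given per-variant sample counts and current sampling probabilities."""
    # Learning phase: while any variant is below the threshold, serve the least-sampled one.
    # Ties are broken by dictionary order, which yields round-robin behavior.
    under_sampled = [v for v, n in counts.items() if n < min_samples]
    if under_sampled:
        return min(under_sampled, key=lambda v: counts[v])
    # Main phase: sample independently per request, so no coordination between requests is needed.
    names = list(probs)
    return rng.choices(names, weights=[probs[v] for v in names], k=1)[0]


# Hypothetical usage with two variants and made-up probabilities.
rng = random.Random(0)
counts = {"variant_a": 0, "variant_b": 0}
probs = {"variant_a": 0.7, "variant_b": 0.3}
for _ in range(20):
    counts[choose_variant(counts, probs, rng=rng)] += 1
print(counts)  # both variants reach the minimum threshold before random sampling starts
```

Because each request only needs the latest probabilities, the expensive recomputation can happen in the background on its own schedule.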

Fun fact: The theoretical analysis that underlies the Track-and-Stop strategy was published in 2016 [1], so this approach would not have been possible before then!

Separation of Concerns

TensorZero handles experimentation directly in your LLM gateway. You don’t have to integrate experimentation logic into your application code at every LLM call site, as you would with standalone experimentation tools such as Statsig.

Concretely, you configure your variants and metrics once in TensorZero’s configuration file, and the gateway automatically manages variant selection and feedback collection. Your application code remains unchanged whether you’re running experiments or not.

Conclusion and Future Directions

TensorZero’s multi-armed bandit algorithm enables you to automatically identify the best LLM configuration with minimal wasted inferences. The algorithm adapts sampling in real time, focuses resources on distinguishing top performers, and stops as soon as there’s sufficient evidence—all while maintaining rigorous statistical guarantees against mislabeling the winner.

Real-world LLM applications present additional complexities beyond a single reward metric: you often care about multiple objectives simultaneously (accuracy, cost, latency), face budget constraints, or need to balance short-term performance with long-term learning. We plan to extend our experimentation framework to handle these multi-objective settings, making automated optimization practical for increasingly complex production scenarios.

More broadly, efficient experimentation is just one piece of the LLM optimization puzzle. Now that we can reliably determine which configurations perform best in production, the next frontier is automatically generating promising new configurations to test—and intelligently deciding which candidates are worth evaluating with real traffic versus filtering out through offline evaluation. We’re building toward a future where LLM applications continuously improve themselves through this closed loop of generation, evaluation, and experimentation.

If you’re interested in trying out TensorZero’s experimentation features, see our docs: How to run adaptive A/B tests

Acknowledgements: Thanks to (in alphabetical order) Gabriel Bianconi, Aaron Hill, Andrew Jesson, Shuyang Li, and Viraj Mehta for helpful feedback.

References

1. Garivier, A., & Kaufmann, E. (2016). Optimal Best Arm Identification with Fixed Confidence. Proceedings of Machine Learning Research, 49, 998-1027.

2. Kaufmann, E., Cappé, O., & Garivier, A. (2016). On the Complexity of Best-Arm Identification in Multi-Armed Bandit Models. Journal of Machine Learning Research, 17(1), 1-42.

3. Jourdan, M., Degenne, R., & Kaufmann, E. (2023). An ε-Best-Arm Identification Algorithm for Fixed-Confidence and Beyond. Advances in Neural Information Processing Systems (NeurIPS), 36.

4. Agrawal, S., & Goyal, N. (2013). Further Optimal Regret Bounds for Thompson Sampling. Proceedings of Machine Learning Research, 31, 99-107.

5. Auer, P., Cesa-Bianchi, N., & Fischer, P. (2002). Finite-time Analysis of the Multiarmed Bandit Problem. Machine Learning, 47, 235-256.

6. Bubeck, S., Munos, R., & Stoltz, G. (2009). Pure Exploration in Multi-armed Bandits Problems. In Algorithmic Learning Theory (ALT 2009), Lecture Notes in Computer Science, vol 5809. Springer.

7. Russo, D. (2016). Simple Bayesian Algorithms for Best Arm Identification. Proceedings of Machine Learning Research, 49, 1417-1418. (Extended version published in Operations Research, 68(6), 1625-1647, 2020.)

8. Zhong, Z., Cheung, W. C., & Tan, V. (2023). Achieving the Pareto Frontier of Regret Minimization and Best Arm Identification in Multi-Armed Bandits. Transactions on Machine Learning Research.

9. Yang, J., Tan, V. Y. F., & Jin, T. (2024). Best Arm Identification with Minimal Regret. arXiv preprint arXiv:2409.18909.

10. Zhang, Q., & Ying, L. (2023). Fast and Regret Optimal Best Arm Identification: Fundamental Limits and Low-Complexity Algorithms. Advances in Neural Information Processing Systems (NeurIPS), 36.

11. Johari, R., Koomen, P., Pekelis, L., & Walsh, D. (2017). Peeking at A/B Tests: Why it matters, and what to do about it. Proceedings of the 23rd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, 1517-1525.

12. Howard, S. R., Ramdas, A., McAuliffe, J., & Sekhon, J. (2021). Time-uniform, nonparametric, nonasymptotic confidence sequences. The Annals of Statistics, 49(2), 1055-1080.

13. Ramdas, A., Grünwald, P., Vovk, V., & Shafer, G. (2023). Game-Theoretic Statistics and Safe Anytime-Valid Inference. Statistical Science, 38(4), 576-601.

14. Kaufmann, E., & Koolen, W. M. (2021). Mixture Martingales Revisited with Applications to Sequential Tests and Confidence Intervals. Journal of Machine Learning Research, 22(246), 1-44.

15. Ramdas, A., Ruf, J., Larsson, M., & Koolen, W. (2020). Admissible anytime-valid sequential inference must rely on nonnegative martingales. arXiv preprint arXiv:2009.03167.

16. Kaufmann, E. (2020). Contributions to the Optimal Solution of Several Bandit Problems. PhD dissertation, Université de Lille.

17. Waudby-Smith, I., & Ramdas, A. (2024). Time-uniform central limit theory and asymptotic confidence sequences. The Annals of Statistics, 52(6), 2613-2640.

18. Goulart, P. J., & Chen, Y. (2024). Clarabel: An interior-point solver for conic programs with quadratic objectives. Version 0.11.1.